Demystifying the Temperature Parameter: A Visual Guide to Understanding Its Role in Large Language Models

Visualizing temperature: a simplified explanation of its role in large language models.

A large language model, such as GPT-3.5, has been trained on vast amounts of text data, allowing it to grasp complex language patterns. At its core it is a next-word predictor: given a context, it suggests the most probable word or phrase to follow, based on the patterns and structures it learned from its training data.
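
To make next-word prediction concrete, here is a toy Python sketch. It swaps the neural network for simple bigram counts over a hypothetical three-sentence corpus, so it only illustrates the idea of a learned next-word distribution, not how GPT-3.5 actually works. The function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional

def build_bigram_counts(corpus: list[str]) -> dict[str, Counter]:
    """Toy 'training': count how often each word follows each preceding word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_distribution(counts: dict[str, Counter], prev_word: str) -> dict[str, float]:
    """Turn the follower counts of prev_word into probabilities."""
    followers = counts.get(prev_word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}

def most_probable_next(counts: dict[str, Counter], prev_word: str) -> Optional[str]:
    """Return the single most likely next word, or None if prev_word was never seen."""
    dist = next_word_distribution(counts, prev_word)
    return max(dist, key=dist.get) if dist else None

# Hypothetical toy corpus, for illustration only.
corpus = ["I like you", "I like you .", "I like tea"]
counts = build_bigram_counts(corpus)
print(next_word_distribution(counts, "like"))  # you ≈ 0.67, tea ≈ 0.33
print(most_probable_next(counts, "like"))      # you
```

A real LLM conditions on the whole context rather than only the previous word, but the output has the same shape: a probability for each candidate next word.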

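Language models are powerful next-word predictors. The temperature setting controls how the model chooses among the candidate next words: it reshapes the probability distribution over those candidates before one is picked, making less likely words more or less likely to be chosen. Many interfaces accept temperatures from 0 to 1, and some allow higher values (OpenAI's API, for example, accepts values up to 2).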

You have probably read many times that temperature 0 means deterministic and 1 means non-deterministic or “creative”, but do you know why?
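
In the usual textbook formulation, and in most open-source sampling code, temperature T works by dividing the model's raw scores (logits) z_i by T before applying the softmax. Treat this as the common convention rather than a guarantee about any particular hosted model:

```latex
p_i(T) = \frac{\exp(z_i / T)}{\sum_{j} \exp(z_j / T)}, \qquad T > 0

\lim_{T \to 0^{+}} p_i(T) =
\begin{cases}
1 & \text{if } i = \arg\max_j z_j \\
0 & \text{otherwise}
\end{cases}
\qquad \text{(assuming a unique maximum)}
```

At T = 1 the distribution is unchanged; T < 1 sharpens it toward the top-scoring word; T > 1 flattens it toward uniform. Because T = 0 would mean dividing by zero, implementations typically special-case it as picking the single highest-scoring word, which gives the deterministic behavior described below for temperature 0.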

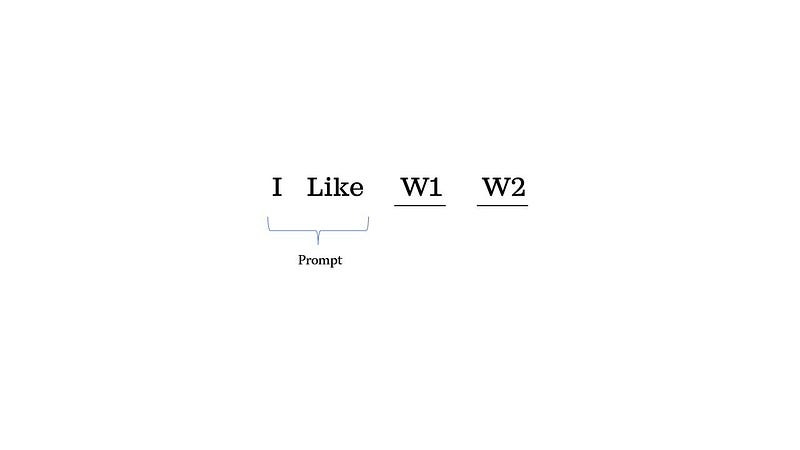

Let's take an example.

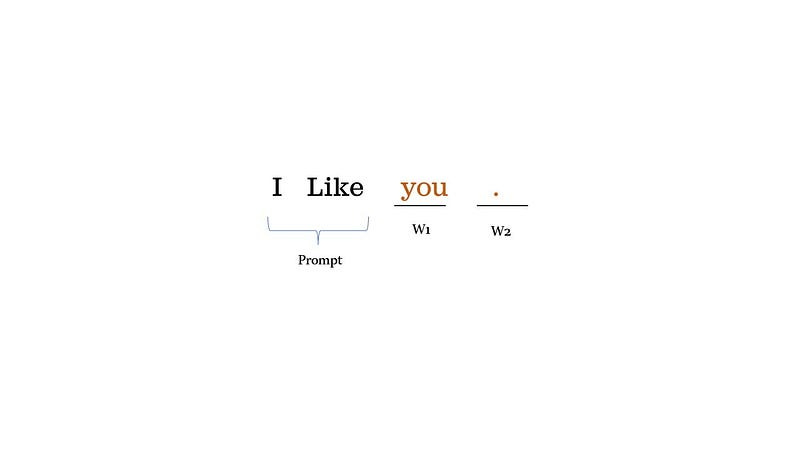

We give the prompt “I Like” to the LLM and expect the model to predict the next two words, w1 and w2.

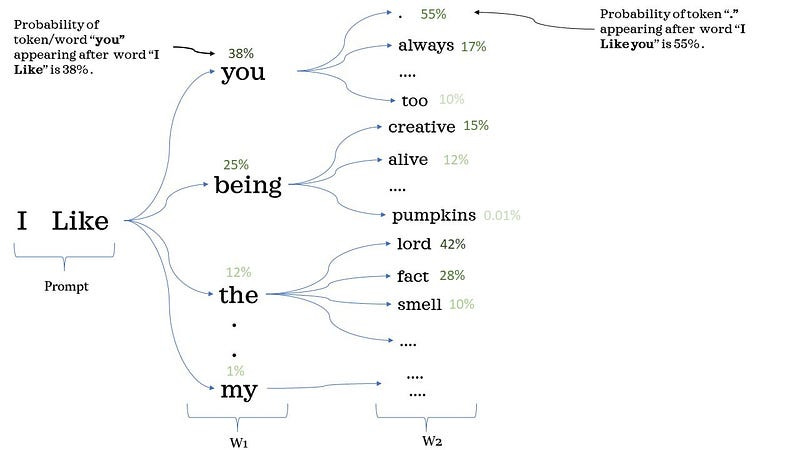

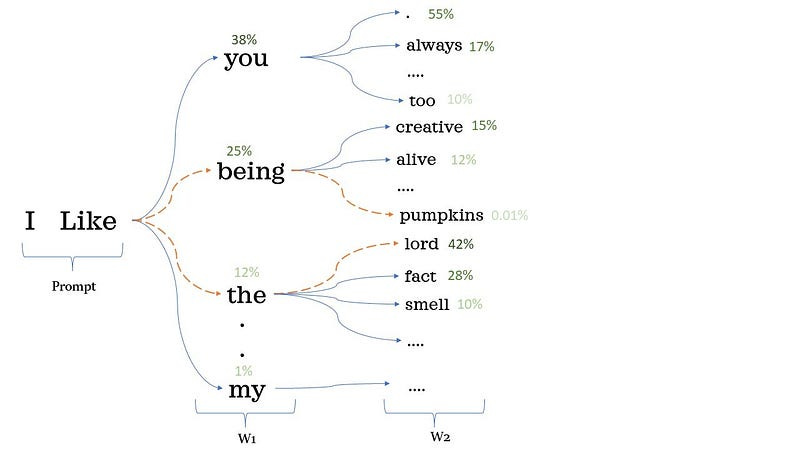

The possible next words the language model considers after the prompt “I Like”, with the probability distribution it learned during training.

The probability of the token “you” appearing after “I Like” is 38%.

The probability of the token “.” appearing after “I Like you” is 55%.
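
Because the model generates one token at a time, the probability of the full two-word completion is the product of these conditional probabilities (the chain rule). A small Python check using only the two numbers quoted above:

```python
# Conditional probabilities quoted in the example.
p_you_given_i_like = 0.38          # P("you" | "I Like")
p_period_given_i_like_you = 0.55   # P("." | "I Like you")

# Chain rule: probability of generating "you" and then "." after "I Like".
p_completion = p_you_given_i_like * p_period_given_i_like_you
print(f"P('you .' | 'I Like') = {p_completion:.3f}")  # 0.209
```

So even the single most likely completion, “I Like you .”, has only about a 21% chance of being produced when the model samples from the unscaled distribution.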

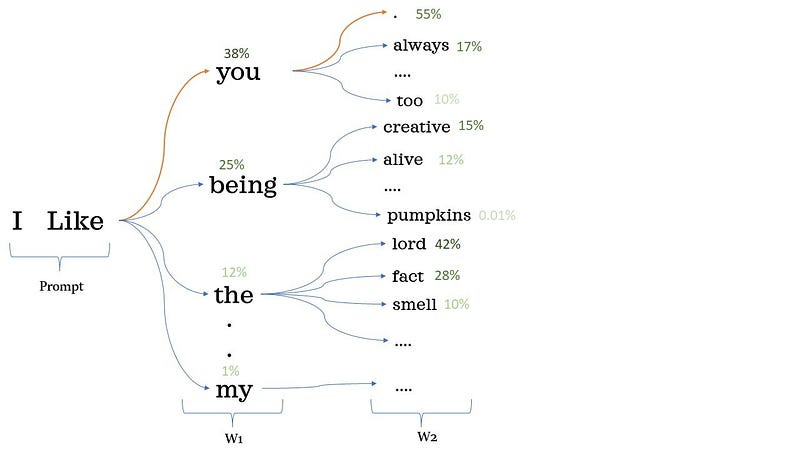

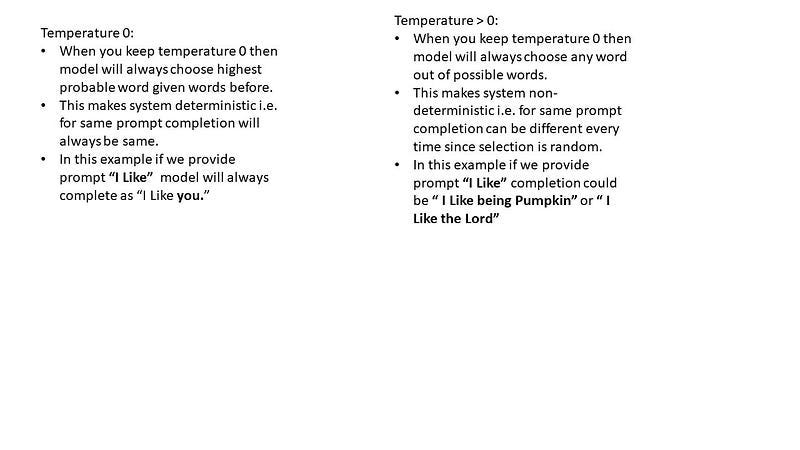

Temperature = 0

If you set the temperature to 0, the model always selects the most probable next word at inference time (greedy decoding), which makes its output deterministic. With the prompt “I Like”, the completion will always be “I Like you .”: there is no randomness in selecting the next word.
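
A minimal Python sketch of temperature-0 (greedy) decoding over the two-step example. Only P(“you” | “I Like”) = 0.38 and P(“.” | “I Like you”) = 0.55 come from the example; every other word and probability in the hypothetical `NEXT_WORD_PROBS` table is a placeholder, and the dictionary lookup stands in for the model.

```python
# Hypothetical next-word tables: only "you" = 0.38 and "." = 0.55 come from the
# example; all other entries are invented placeholders so each row sums to 1.
NEXT_WORD_PROBS = {
    "I Like": {"you": 0.38, "being": 0.25, "the": 0.22, "to": 0.15},
    "I Like you": {".": 0.55, "too": 0.25, "and": 0.20},
}

def greedy_next(context: str) -> str:
    """Temperature 0: always pick the single most probable next word."""
    dist = NEXT_WORD_PROBS[context]
    return max(dist, key=dist.get)

def greedy_complete(prompt: str, steps: int) -> str:
    """Append the greedy choice one word at a time, feeding each result back in."""
    text = prompt
    for _ in range(steps):
        text = f"{text} {greedy_next(text)}"
    return text

print(greedy_complete("I Like", steps=2))  # I Like you .
```

Running it any number of times prints the same completion, because no random choice is involved.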

Temperature > 0

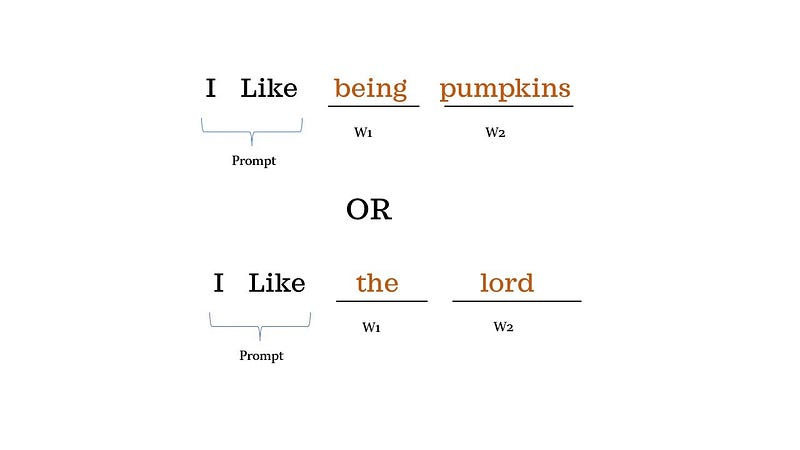

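If you set the temperature above 0, the model samples the next word at random from the probability distribution: more probable words are still picked more often, but they are no longer guaranteed. This makes the output non-deterministic, or “creative”. With the prompt “I Like”, the completion might be “I Like being pumpkins” or “I Like the lord”; there is a degree of randomness in selecting each next word. The temperature value sets how much randomness: values below 1 sharpen the distribution toward the most likely words, while higher values flatten it so that less likely words are chosen more often.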
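
A Python sketch of sampling with temperature, assuming we only have probabilities rather than raw logits. Since logits are defined only up to an additive constant, log(p) can stand in for them, so softmax(log(p) / T) matches dividing the true logits by T. The distribution is hypothetical: only P(“you”) = 0.38 comes from the example.

```python
import math
import random

# Hypothetical next-word distribution after "I Like": only P("you") = 0.38
# comes from the example; the other words and values are placeholders.
probs = {"you": 0.38, "being": 0.25, "the": 0.22, "to": 0.15}

def apply_temperature(dist: dict[str, float], temperature: float) -> dict[str, float]:
    """Return softmax(log(p) / T), i.e. the distribution rescaled by temperature T."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0; use argmax (greedy) for T = 0")
    scaled = {w: math.log(p) / temperature for w, p in dist.items()}
    top = max(scaled.values())  # subtract the max before exp() for numerical stability
    weights = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(weights.values())
    return {w: v / total for w, v in weights.items()}

def sample_next(dist: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one next word at random from the temperature-scaled distribution."""
    scaled = apply_temperature(dist, temperature)
    words = list(scaled)
    return rng.choices(words, weights=[scaled[w] for w in words], k=1)[0]

for t in (0.2, 1.0, 2.0):
    print(f"T={t}: P(you)={apply_temperature(probs, t)['you']:.2f}")
# T=0.2: P(you)=0.83
# T=1.0: P(you)=0.38
# T=2.0: P(you)=0.31

rng = random.Random(42)  # fixed seed only so this demo is reproducible
print([sample_next(probs, 1.0, rng) for _ in range(5)])  # varies with the seed
```

The seed is only there to make the demo repeatable; without it, repeated calls at T > 0 can return different words, which is exactly the non-determinism described above.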

A slight change in the prompt changes the output drastically

Suppose you change the prompt from “I Like” to “I Like being”. The candidate next words and their probabilities change drastically. This is precisely why prompts need to be versioned: think of a prompt as a hyperparameter in a traditional machine learning algorithm, where a slight change can change the result drastically.
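
One lightweight way to act on this is to treat each prompt like a versioned configuration, storing the exact text together with the sampling settings (such as temperature) it was tested with. The registry below is a hypothetical in-memory sketch; `PromptVersion`, `register`, and `latest` are illustrative names, not an existing library.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PromptVersion:
    """One immutable prompt version plus the sampling settings used with it."""
    name: str
    version: int
    template: str
    temperature: float

# Hypothetical in-memory registry; a real project might keep this in git or a database.
REGISTRY: dict[tuple[str, int], PromptVersion] = {}

def register(p: PromptVersion) -> None:
    """Add a new version; refuse to silently overwrite an existing one."""
    key = (p.name, p.version)
    if key in REGISTRY:
        raise ValueError(f"{p.name} v{p.version} already exists; bump the version instead")
    REGISTRY[key] = p

def latest(name: str) -> PromptVersion:
    """Return the highest-numbered version registered under name."""
    versions = [p for (n, _), p in REGISTRY.items() if n == name]
    return max(versions, key=lambda p: p.version)

register(PromptVersion("greeting", 1, "I Like", temperature=0.0))
register(PromptVersion("greeting", 2, "I Like being", temperature=0.0))
print(latest("greeting").template)  # I Like being
```

Making each version immutable means an output can always be traced back to the exact prompt text and temperature that produced it.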

Leave your comments, share and subscribe.